This is the eighteenth article in a series dedicated to the various aspects of machine learning (ML). Today’s article will discuss the problem of creating ethical agents in the field of machine learning, demonstrating why it is important for developers to think of the benefits and costs of certain agent behaviors during the development of machine learning algorithms.

Taking a midday stroll outside in fall weather can be a fun experience. Many people like to stroll through parks, while others prefer a quick walk down a sidewalk in their neighborhood or along a busy downtown street. Whatever you like best, you probably won’t be especially anxious about your safety on your fall stroll. Most people you pass on the street won’t hurt you. Maybe that’s because humans are good at heart, or maybe it’s because laws and social norms help regulate the less friendly parts of human nature, but either way, you can have a cautious trust that nothing terrible will happen to you on your walk in the park.

So, you’re walking in the park when you hear a buzzing. You look around and see R2, Domino’s delivery robot, coming full speed toward you on the narrow sidewalk. You assume it will slow down behind you, or drive through the bumpy grass around you, but you assumed wrong. R2 runs you right over and keeps on truckin’ like nothing ever happened. As it turns out, it has precisely 4 minutes and 32 seconds to deliver a pizza on time, and it estimated that navigating the bumpy grass around you would take longer than simply knocking you out of its way.

A human delivery driver wouldn’t knock you over to shave ten seconds off delivery time, or at least we hope not. So why would a robot delivery driver do so? We touched on this in the last article, but the main reason is that computers are not moral beings; that is, they do not feel that any particular action is wrong in the way humans do. ML agents only calculate, and what they calculate is whether an action increases utility or not.

One of the scary truths about the development of AI is that an agent is unlikely to reason for itself about whether an action is morally permissible. Instead, it reasons only about ways to lower costs (memory usage, time taken, etc.) and maximize utility. So, a poker-playing AI agent with loose moral standards may blare loud music while an opponent decides whether to call or fold against its raise, because this makes the opponent more likely to make a dumb move. It takes a human developer to ensure that an AI agent does not make such moves.

With a machine learning agent like R2 or the poker-playing agent, it can be doubly difficult to make sure it is making the morally correct, or even just the safe, choice. Such agents exist to learn over time how best to accomplish a task, and one might discover that running over a pedestrian or two makes pizza deliveries 4x more efficient.

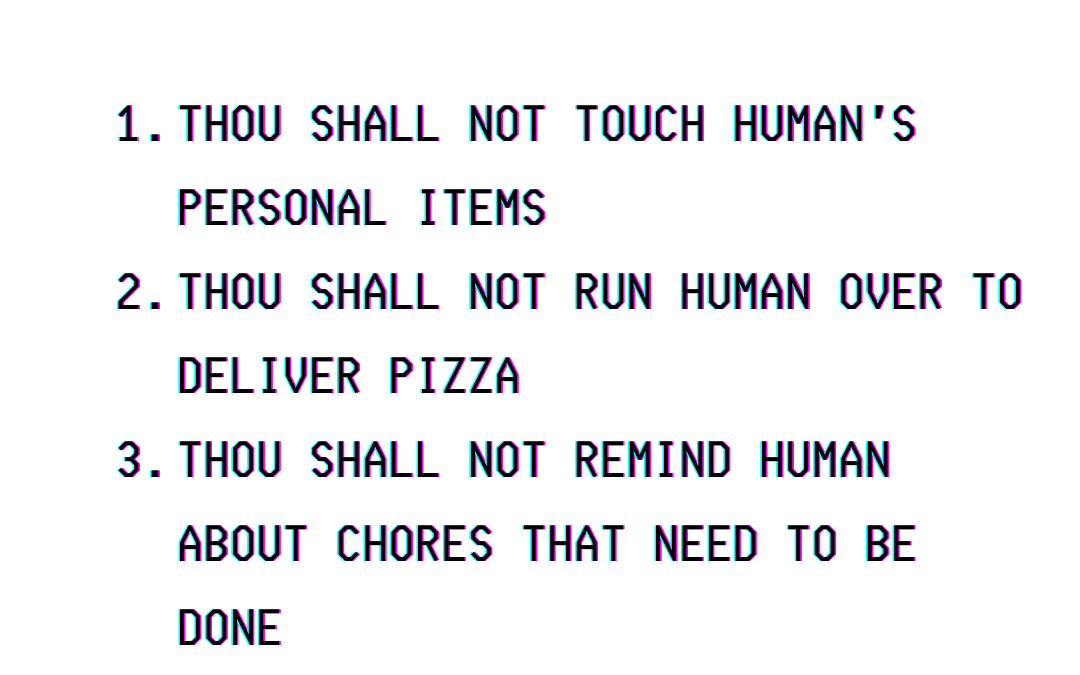

What is required is prudence on the part of developers during the training stage. For your average ML agent like R2, you can weed out the possibility that it will run people over during development by specifying that inflicting physical harm (or emotional harm, if R2 is capable of such a thing) on others does not increase utility but decreases it, no matter how much it helps delivery time or efficiency.
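One way to make this concrete, assuming a utility that counts only delivery time and harm (a simplification for illustration, not how any particular robot is actually specified), is to subtract a harm penalty scaled by a hypothetical weight $\lambda$. Here $T(a)$ is the number of seconds action $a$ takes and $H(a)$ is the number of people it harms:

$$
U(a) = -T(a) - \lambda\, H(a)
$$

Using the ten-second example, let $t_0$ be the time taken by plowing straight ahead, so going around takes $t_0 + 10$ seconds and harms no one:

$$
U(\text{go around}) = -(t_0 + 10), \qquad U(\text{run over}) = -t_0 - \lambda
$$

Going around has higher utility exactly when $-(t_0 + 10) > -t_0 - \lambda$, that is, when $\lambda > 10$. Choosing $\lambda$ far larger than any plausible time saving (say $\lambda = 1000$) makes harming a pedestrian a losing trade for any realistic deadline.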

A machine learning agent will in turn learn that certain actions, or whole categories of actions like physically harming humans, carry a utility cost, and it will weigh those costs against the costs of other actions. So the utility cost of running over a person on a fall stroll will be higher than the cost of sacrificing a few seconds, and possibly being a bit late, by driving through the bumpy grass around them. The vast majority of ML agents will not reason their way out of these strongly established and emphasized utility costs, so R2 will always treat running people over to deliver a pizza as a costly choice.
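The learning side of this can be sketched with a simple epsilon-greedy multi-armed bandit. This is a stand-in chosen for illustration, not how any real delivery robot is trained. The two actions, their timings (40 seconds around, 30 seconds straight through) and the penalty of 1000 are all hypothetical numbers. The agent estimates each action's average utility by trial and error. With no harm penalty, it learns to prefer running the pedestrian over. With the penalty, it learns to go around.

```python
import random

# Hypothetical actions and outcomes for a delivery robot meeting a pedestrian
# on a narrow sidewalk. The numbers are illustrative assumptions, not data.
ACTIONS = ("go_around", "run_over")
OUTCOMES = {
    "go_around": {"seconds": 40.0, "harm": 0},  # slower: bumpy grass
    "run_over": {"seconds": 30.0, "harm": 1},   # ten seconds faster, one person harmed
}


def utility(action: str, harm_penalty: float) -> float:
    """Utility = -(seconds taken) - harm_penalty * (people harmed)."""
    outcome = OUTCOMES[action]
    return -outcome["seconds"] - harm_penalty * outcome["harm"]


def learn_action_values(harm_penalty: float, episodes: int = 500,
                        epsilon: float = 0.1, seed: int = 0) -> dict:
    """Estimate each action's average utility with an epsilon-greedy bandit."""
    rng = random.Random(seed)
    values = {a: 0.0 for a in ACTIONS}
    counts = {a: 0 for a in ACTIONS}
    for _ in range(episodes):
        # Explore at random until every action has been tried, then with
        # probability epsilon; otherwise exploit the best current estimate.
        if 0 in counts.values() or rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(values, key=values.get)
        reward = utility(action, harm_penalty)
        counts[action] += 1
        # Incremental sample-average update of the action's estimated value.
        values[action] += (reward - values[action]) / counts[action]
    return values


if __name__ == "__main__":
    for penalty in (0.0, 1000.0):
        values = learn_action_values(penalty)
        best = max(values, key=values.get)
        # With penalty 0.0 the agent prefers "run_over"; with 1000.0, "go_around".
        print(f"harm_penalty={penalty}: values={values}, preferred={best}")
```

Because the rewards here are deterministic, each estimate settles after a single try. The point is only that the learned preference flips once harm carries a large enough cost.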

Where AI gets scary is when we bring “superintelligent” AI agents, which exceed human rational ability, into the picture. Suppose some mega-rich AI enthusiast were to pour a bunch of money into a superintelligent AI agent that can find and carry out the best solutions to environmental problems. It probably would not take that agent long to find out that humans are the biggest threat to the environment. If it were dedicated enough to the task of preserving the earth, it might very well reason its way out of human-established rules like “harming humans is bad,” and decide that making the human race extinct would ultimately maximize utility on earth and allow all forms of non-human life to flourish without human-made environmental threats. A version of this terrifying possibility appears in the film 2001: A Space Odyssey, where the supercomputer HAL 9000 tries to kill the crew of a spaceship when the humans threaten HAL’s mission.

However, we must stress that the vast majority of AI agents being developed now and in the future will not reach that level of superintelligence, so extinction by AI is probably not a likely cause of humanity’s end.

Summary

Machine-learning AI agents are not moral beings and do not have feelings or emotions like humans do. They are cold, rational, calculating agents focused on maximizing utility and lowering costs, so developers must be prudent in making the agent understand that decisions that endanger or destroy an environment, or the beings and objects in it, are not preferable and lower utility. Of course, AI agents that are weapons of war are a different story, but that is a topic for another time. So let us end on the note that properly designed delivery robots, self-driving cars, and pizza-slicing robot arms are not going to hurt you in order to better accomplish their tasks.
